Kaspa Benefits & Features

Kaspa solves the trilemma in the usage of digital assets: security, scalability, and decentralization. Using a blockDAG instead of a blockchain, Kaspa allows the fastest, most scalable, and most secure transactions with no sacrifice to decentralization.

Fastest Transactions

Kaspa’s blockDAG network generates 10 blocks every second for posting transactions to the ledger. Combined with fully confirmed transactions in 1 second, this makes Kaspa ideal for everyday transactions.

Instant Confirmation

Kaspa was designed to be hundreds of times faster than Bitcoin, with each Kaspa Transaction confirmed to the network in one second, and each transaction fully finalized in 10 seconds on average.

Scalable Nakamoto Consensus

Efficient Proof-of-Work

Security

BlockDAG

Kaspa on Rust

Kaspa was rewritten from Go to Rust. The Rust language emphasizes performance, type safety, and concurrency, boosting Kaspa’s overall potential speed to 10bps. This rewrite is an integral part of Kaspa’s future goal of reaching 100bps.

Fair Launch

November 7, 2021

No pre-mine, no ICO, no pre-sales, and no coin allocations, ensuring a fair distribution to all participants from the start, adhering to a pure Nakamoto ethos.

GhostDAG

Kaspa improves upon the PHANTOM protocol with GhostDAG, a secure, efficient consensus mechanism ensuring reliable and irreversible transaction ordering.

Scalable

The blockDAG architecture of Kaspa allows large amounts of transactions within very short periods of time, with many blocks being created simultaneously and at an average rate of 1bps (10bps coming).

Pruning

Kaspa’s pruning strategy maintains a compact blockDAG, requiring minimal storage hardware, lowering the cost of entry, encouraging decentralization and inclusivity.

Core Features & Technology

Kaspa is a revolutionary advancement in technology based on years of academic and theoretical research.

Scalable Nakamoto Consensus

The GHOSTDAG protocol, developed by the Kaspa founder and implemented in Kaspa, is the only decentralized protocol that fully solves the trilemma. GHOSTDAG enables the best possible throughput and the shortest possible confirmation times, combining proof-of-work grade security and decentralization with performance comparable to state-of-the-art proof-of-stake networks. The upcoming DAGKnight update will provide the first protocol whose confirmation times adapt to network conditions, providing new levels of responsiveness never achieved before.

In 2014, Vitalik Buterin made an observation about the current state of the art of decentralized consensus: that it seems to force the designer to choose between scalability, security, and decentralization. This observation was coined the blockchain trilemma.

While some people revere the trilemma as a fundamental law of the universe, others, including Vitalik himself, see it as a contemporary observation, and set out to solve it.

Since 2014, there have been many attempts to solve the trilemma. Some turned out to be broken, while others only partially solved the trilemma by trading off some of the security properties of Nakamoto consensus. Some replace proof-of-work with variants of proof-of-stake, reducing security and decentralization; some increase throughput at the cost of increased latency (common in parallel-chain architectures, where the confirmation time increases with the number of chains); and some propose weaker forms of transaction finalization.

The GHOSTDAG protocol, implemented in Kaspa, is the only implemented protocol that fully solves the blockchain trilemma.

GHOSTDAG is a blockDAG architecture, which means that there are (almost) no limitations on how blocks are allowed to interconnect. This allows reading off much more information from the resulting graph, reaching faster and more reliable conclusions about the state of the network and which blocks should be considered honest. GHOSTDAG seeks a pattern called a k-cluster, which is a set of blocks where almost all blocks are “aware” of each other, and the number of “parallel” blocks each block has is limited to k. This approach furnishes a protocol that provably provides Nakamoto consensus security, based on the observation that honest blocks are organically arranged in a k-cluster, whereas any attacker who withholds blocks will necessarily be excluded from this cluster.
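To make the k-cluster notion concrete, the following is a minimal Python sketch, not taken from any Kaspa codebase. It assumes a DAG represented as a dictionary from each block to the list of its parents; the names `past`, `anticone`, `is_k_cluster` and `toy_dag` are illustrative only. Two blocks are treated as "parallel" when neither is in the past of the other.

```python
from collections import deque

def past(parents, block):
    """All blocks reachable from `block` by following parent references."""
    seen = set()
    queue = deque(parents[block])
    while queue:
        current = queue.popleft()
        if current not in seen:
            seen.add(current)
            queue.extend(parents[current])
    return seen

def anticone(parents, block):
    """Blocks that are neither in the past nor in the future of `block`."""
    block_past = past(parents, block)
    return {
        other for other in parents
        if other != block
        and other not in block_past
        and block not in past(parents, other)
    }

def is_k_cluster(parents, blocks, k):
    """True if every block in `blocks` has at most k parallel blocks inside `blocks`."""
    blocks = set(blocks)
    return all(len(anticone(parents, b) & blocks) <= k for b in blocks)

# Toy DAG (hypothetical): A, B and C are mined in parallel on top of genesis,
# and D references both A and B.
toy_dag = {
    "genesis": [],
    "A": ["genesis"],
    "B": ["genesis"],
    "C": ["genesis"],
    "D": ["A", "B"],
}

print(is_k_cluster(toy_dag, {"genesis", "A", "B", "D"}, k=1))
print(is_k_cluster(toy_dag, toy_dag, k=1))
```

In this toy example, block C is parallel to A, B and D, so the whole DAG is not a 1-cluster, while the set without C is. This sketch recomputes pasts on every call and is meant only to illustrate the definition.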

Importantly, the security of the protocol does not degrade with parallel blocks, as they are not orphaned, allowing an arbitrary increase in the block creation rate, limited only by the hardware capabilities of nodes. Currently the goal is to operate with nodes running on $100 hardware, and under these constraints GHOSTDAG has been successfully running at 3000 TPS over 10 BPS on the testnet, and the increased block rate will soon be released to mainnet with the Crescendo hard-fork.

Resources: GHOSTDAG Whitepaper, GHOSTDAG 101 Workshop

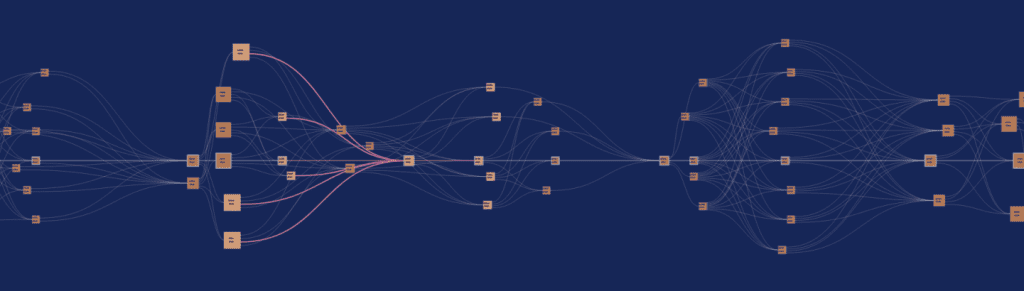

BlockDAG

Kaspa is the first blockDAG cryptocurrency (no blockchain). A blockchain is a linear system, where transactions are recorded in blocks, which are ordered by time and sealed.

A blockDAG is a directed acyclic graph, a mathematical structure where the vertices represent blocks and the edges are references between child and parent blocks. This novel implementation of distributed ledgers is the first of its kind, permitting next-generation scalability, high transaction throughput, and instantaneous confirmations while remaining decentralized.

The term “DAG” stands for Directed Acyclic Graph, a mathematical concept for a directed graph with no directed cycles. It consists of vertices and edges, with each edge directed from one vertex to another. In the context of distributed ledgers, a blockDAG is a DAG whose vertices are blocks and whose edges are references from blocks to their predecessors. The blockDAG seeks to solve the problems with linear blockchains and Nakamoto consensus. These problems include lack of scalability, selfish mining, and orphan blocks. Unlike in a traditional blockchain, a block in a blockDAG may reference multiple predecessors instead of a single one, and blocks reference all tips of the graph instead of just the tip of the longest chain. By referencing all tips of the graph, a blockDAG incorporates blocks from different branches rather than discarding everything outside the longest chain.

Thus, in a BlockDAG, no blocks are wasted or orphaned, all blocks are published to the ledger, and no mining power is wasted. Due to this structure, blocks are allowed to be generated more frequently, facilitating more transactions, and resulting in almost instant confirmations. How is this all possible? By deviating from the longest chain consensus method, and leveraging Kaspa’s novel GhostDAG (Phantom 2.0) consensus mechanism.

BlockDAGs are superior to singular or parallel block chains due to the maximal revelation principle. When you mine a block, and share all the tips of the blocks you are aware of, you maximize the information you send.

GhostDAG

Kaspa’s GhostDAG protocol refines DAG-based technology to improve scalability and security while maintaining fair consensus. Unlike traditional DAG systems, which struggle to determine the main chain, GhostDAG assigns probabilities to blocks for fair inclusion and rewards. It also uses k-sets for efficient consensus and manages cases where multiple blocks are created simultaneously. This approach enables Kaspa to achieve Nakamoto Consensus, ensuring a secure and consistently agreed-upon transaction history without relying on a traditional linear blockchain.

GhostDAG is the consensus mechanism which Kaspa currently utilizes and improves upon the PHANTOM consensus. PHANTOM requires solving an NP-hard problem, and is not suitable for practical applications on its own. Instead, we use the intuition behind PHANTOM to devise a greedy algorithm, GHOSTDAG, which can be implemented more efficiently. We prove formally that GHOSTDAG is secure, in the sense that its ordering of blocks becomes exponentially difficult to reverse as time develops. The main achievement of GHOSTDAG can be summarized as follows: Given two transactions tx1, tx2 that were published and embedded in the blockDAG at some point in time, the probability that GHOSTDAG’s order between tx1 and tx2 changes over time decreases exponentially as time grows, even under a high block creation rate that is non-negligible relative to the network’s propagation delay, assuming that a majority of the computational power is held by honest nodes.

Similar to PHANTOM, the GHOSTDAG protocol selects a k-cluster, which induces a coloring of the blocks as Blues (blocks in the selected cluster) and Reds (blocks outside the cluster). However, instead of searching for the largest k-cluster, GHOSTDAG finds a k-cluster using a greedy algorithm. The algorithm constructs the Blue set of the DAG by first inheriting the Blue set of the best tip Bmax, i.e., the tip with the largest Blue set in its past, and then adding to the Blue set blocks outside Bmax’s past in a way that preserves the k-cluster property.

Observe that this greedy inheritance rule induces a chain: the last block of the chain is the selected tip of G, Bmax; the next block in the chain is the selected tip of the DAG past(Bmax); and so on down to the genesis. We denote this chain by Chn(G) = (genesis = Chn_0(G), Chn_1(G), ..., Chn_h(G)). The final order over all blocks in GHOSTDAG follows a similar path to the colouring procedure: we order the blockDAG by first inheriting the order of Bmax on blocks in past(Bmax), then adding Bmax itself to the order, and finally adding blocks outside past(Bmax) according to some topological ordering. Thus, essentially, the order over blocks becomes as robust as the colouring procedure.
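The greedy colouring and ordering described above can be sketched in a few lines of Python. This is a simplified, unoptimized illustration and not the production algorithm: it assumes the DAG is a dictionary from block name to parent list, breaks ties between tips by block name (an assumption made here for determinism), and uses "sort by size of past" as its topological ordering. All function and variable names are hypothetical.

```python
from functools import lru_cache

def ghostdag_order(parents, k):
    """Greedy colouring and ordering of a blockDAG given as {block: [parent, ...]}."""

    @lru_cache(maxsize=None)
    def past(block):
        result = set()
        for parent in parents[block]:
            result.add(parent)
            result |= past(parent)
        return frozenset(result)

    def parallel(a, b):
        return a != b and a not in past(b) and b not in past(a)

    def is_k_cluster(blocks):
        return all(sum(parallel(a, b) for b in blocks) <= k for a in blocks)

    @lru_cache(maxsize=None)
    def solve(dag):
        # `dag` is a frozenset of blocks that is closed under taking parents.
        if len(dag) == 1:
            return dag, tuple(dag)  # only the genesis block remains
        tips = [b for b in dag if not any(b in parents[c] for c in dag)]
        # Selected tip: largest Blue set in its past; ties broken by name (assumption).
        b_max = min(tips, key=lambda t: (-len(solve(past(t))[0]), t))
        blue, order = solve(past(b_max))
        blue, order = set(blue) | {b_max}, list(order) + [b_max]
        rest = [b for b in dag if parallel(b, b_max)]
        # Sorting by past size yields a topological order (parents before children).
        for block in sorted(rest, key=lambda b: (len(past(b)), b)):
            if is_k_cluster(blue | {block}):
                blue.add(block)  # block stays Blue only if the k-cluster property holds
            order.append(block)  # Red blocks are still ordered, just not coloured Blue
        return frozenset(blue), tuple(order)

    blue, order = solve(frozenset(parents))
    return set(blue), list(order)

# Toy DAG (hypothetical): A, B and C are parallel children of genesis; D merges A and B.
toy_dag = {"genesis": [], "A": ["genesis"], "B": ["genesis"],
           "C": ["genesis"], "D": ["A", "B"]}
blue_set, block_order = ghostdag_order(toy_dag, k=1)
print(sorted(blue_set), block_order)
```

With k = 1 on this toy DAG, the selected tip is D, the blocks genesis, A, B and D are coloured Blue, and C ends up Red but is still included at the end of the order.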

Instant Confirmations

Kaspa is the only proof-of-work capable of providing confirmation times as fast as the internet allows, without compromising the security of the network or the end user. It is the only way to obtain security equivalent to Bitcoin at speeds comparable to the fastest proof-of-stake protocols.

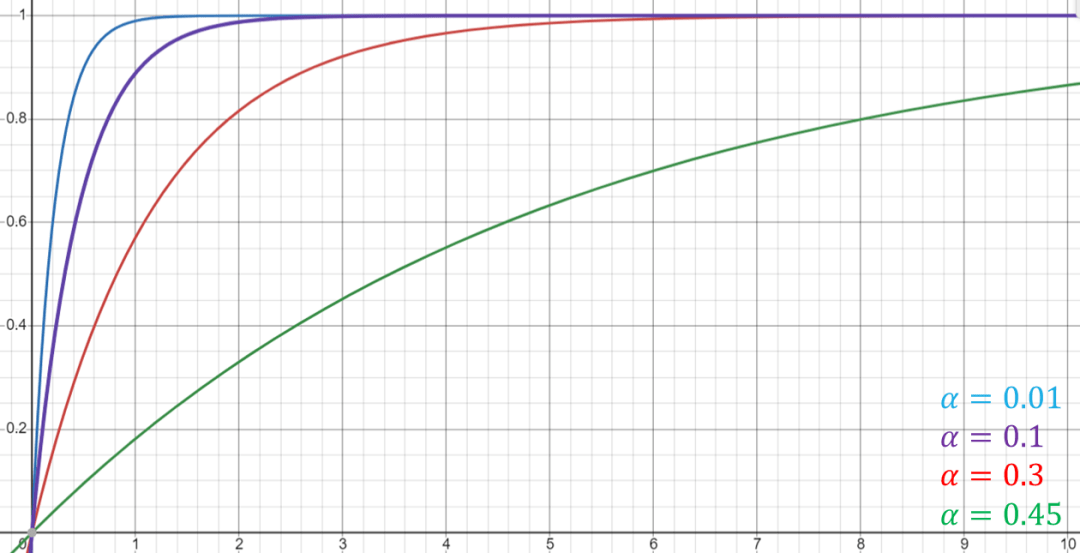

Mathematically speaking, “confirmation time” describes how fast the probability of a transaction being reverted goes to zero as a function of the number of confirmations it has accumulated, assuming an honest majority. For example, Bitcoin’s “six block rule” provides 92% security against a 40% attacker, and 99.4% security against a 30% attacker. Generally speaking, the probability that an attacker holding a fraction α<½ of the hashrate reverts a transaction after C confirmations is bounded by (α/(1-α))^C (there is also an explicit expression in the literature, but it is very complicated). The accompanying graph (ignoring the discrete nature of block creation) illustrates how fast the confidence grows with C for several values of α.
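The bound (α/(1-α))^C is easy to evaluate directly. The short Python sketch below does so; the function names are illustrative, and it uses only the simple bound rather than the more complicated explicit expression, so its numbers are approximations. For six confirmations it gives roughly 91% security against a 40% attacker and 99.4% against a 30% attacker, in line with the figures quoted above.

```python
def revert_bound(alpha, confirmations):
    """Upper bound (alpha / (1 - alpha)) ** C on the probability of a revert."""
    if not 0 <= alpha < 0.5:
        raise ValueError("the bound only holds for an attacker fraction below 1/2")
    return (alpha / (1 - alpha)) ** confirmations

def confirmations_needed(alpha, max_revert_probability):
    """Smallest C for which the bound is at most max_revert_probability."""
    if max_revert_probability <= 0:
        raise ValueError("max_revert_probability must be positive")
    c = 0
    while revert_bound(alpha, c) > max_revert_probability:
        c += 1
    return c

for alpha in (0.3, 0.4):
    security = 1 - revert_bound(alpha, 6)
    print(f"attacker {alpha:.0%}: six confirmations give about {security:.1%} security")
```

Because the bound depends only on the number of confirmations, `confirmations_needed` can be read as the number of blocks to wait for a chosen risk level, independent of how long each block takes to create.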

In particular, no proof-of-work, not even Bitcoin, can provide security against α that is very close to 0.5. In Kaspa, we get to choose the security threshold and set the system parameters to induce security up to that threshold. Currently, Kaspa is parametrized to provide Bitcoin-like confirmation scaling, assuming there is no attacker with more than 47.5% of the global hashrate.

Something interesting to note about how confirmation times scale in the honest majority setting is that they are measured only in the number of confirmations, and are agnostic to the time it took to create each block. Since Kaspa blocks accumulate confirmations at internet speed, its transactions are confirmed at internet speed.

The remaining loose end is what happens in the honest minority case. The defense against this scenario (in any proof-of-work) is to make 51% attacks impractical. In ASIC minable coins, this is achieved by hardware scarcity. As the global hashrate increases, and more miners enter the scene, it becomes much harder and more expensive to procure hardware capable of mining Kaspa. It is infeasible to lease enough hardware to reach a majority for a short time, forcing attackers to accumulate a lot of expensive hardware – driving ASIC prices up in the process – making such attacks extremely expensive (current estimations are in the hundreds of millions of dollars, ignoring the increase in ASIC price as a result of buying pressure).

Resources: The two types of security (Tweet)

Scalability

Kaspa solves the scalability problem with its ability to generate and confirm multiple blocks per second. This comes with no trade-off to security and decentralization as seen with Proof-of-Stake networks.

Having a fast block ledger with instant confirmations is very important for users, but how many people and transactions can the ledger handle at a single time? Could thousands of people transact at the same time with no impact on speed or confirmation? What about hundreds-of-thousands of people? How about millions to billions of people? These questions highlight the scalability problems inherent with most blockchains, meaning that they get clogged or don’t function as designed when a large number of users and transactions occur within a short period of time.

Thanks to the blockDAG design of Kaspa, we are better able to handle large amounts of transactions within very short periods of time, with multiple blocks being created simultaneously at an average rate of one block every second (wow, that’s a lot of blocks!). This allows us to pack a large number of transactions into very short durations of time, which is unique for a true and purely decentralized proof-of-work network.

Fair Launch

Kaspa has no preallocations whatsoever; 100% of Kaspa was fairly mined in competitive terms. But that is just one of the measures taken to ensure a fair coin spread. The combination of rapid emissions and a new hashing algo (that had no ASICs, or even GPU/FPGA software, available) ensured that the mining was initially competitive for CPUs, followed by an epoch of GPU mining where more than 60% of the coins were mined. Since GPU miners have very high operational costs, this caused Kaspa emissions to spread well.

The efforts to make the launch as fair as possible were multi-pronged. They started before the testnet was launched by encouraging peers and the public to form an open community in Discord. After the testnet launched, a few press releases were made to draw in more followers, as well as posts by contributors in all social media available to them. At the time of launch the Kaspa Discord server was more than 100 members strong, and following the launch it rapidly increased, reaching 1000 members within less than two weeks, and that trend has been steady for the first few months.

During the first two weeks of the network, there was a randomized emission rule that gave each block an average reward of 750 KAS. The rule was then removed and replaced with a fixed reward of 500 KAS. Exactly six months after launch, the network transitioned to the chromatic phase, where the block reward is reduced every month by about 5.6%, accumulating to a yearly halving. This rapid emission ensured that by the time ASIC miners were announced, most of the coin was already in circulation. This is desirable since GPU miners have much higher operational costs, forcing them to sell more of their yield, helping the spread of the coin.
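The "about 5.6% per month" figure follows from requiring twelve equal monthly reductions to compound to a halving. The Python sketch below checks this arithmetic. It is a smooth model with no rounding, the names are illustrative, and the starting reward passed to `reward_after` is only an example value, not a statement about the exact on-chain schedule.

```python
MONTHS_PER_YEAR = 12

# Monthly multiplier such that twelve reductions compound to exactly one half.
monthly_factor = 0.5 ** (1 / MONTHS_PER_YEAR)
monthly_reduction_percent = (1 - monthly_factor) * 100

def reward_after(months, initial_reward):
    """Block reward after `months` monthly reductions (smooth model, no rounding)."""
    return initial_reward * monthly_factor ** months

print(f"monthly reduction: {monthly_reduction_percent:.2f}%")
print(f"example reward after 12 months, starting from 500: {reward_after(12, 500):.1f}")
```

The monthly multiplier is 2^(-1/12), which is approximately 0.944, so each month removes about 5.6% of the previous reward and a year removes exactly half.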

The mining algorithm itself was chosen in a community poll to be the kHH algorithm, a variant of the HeavyHash algorithm modified just enough so that existing GPU/FPGA software (developed by projects using HH such as OBTC) would not be able to mine Kaspa. Indeed, a GPU miner emerged from community developers less than a month after launch.

The fairness of the launch is also apparent from the difficulty graph, showing constant increase since the first days.

Security

Kaspa harnesses an ultra-secure block network with no compromise to decentralization. This is achieved with pure, stake-less proof-of-work combined with the revolutionary GhostDAG consensus mechanism.

Kaspa employs the same security principles and methodology as Bitcoin, except that it replaces the SHA-256 proof-of-work hash function with kHeavyHash. kHeavyHash, which inherits all the security properties of SHA-256, is a modification of the SHA-256 hashing algorithm which also includes a weighting function.

Thus, the blockDAG is secured by a robust network of decentralized volunteers (miners) who validate and sign transactions. Like Bitcoin, Kaspa is also fully decentralized and permissionless. Anyone can participate and anyone can help secure the network.

Efficient Implementation in Rust

The Rusty-Kaspa client is a marvel of research and engineering. Initiated by former DAGLabs employee Michael Sutton, Rusty-Kaspa is developed and maintained by a global team of volunteering contributors spanning 16 countries. The Rusty-Kaspa client is extremely efficient, allowing for high-throughput operation on cheap hardware.

The Testnet 11 network has been running flawlessly for months, operating at rates between 2400 and 3000 transactions per second, over 10 BPS. Testnet 11 is an open network, and people successfully run full nodes on Raspberry Pis, decade-old laptops, and mid-tier mobile phones. The 10BPS update is set to be hard-forked into mainnet in the following weeks as part of the Crescendo hard-fork.

Solving the trilemma means that the security of the protocol is no longer a bottleneck of its throughput. Consequently, we can increase the throughput arbitrarily without harming the security of the protocol. However, increasing the throughput does have other consequences: it increases the hardware requirements for nodes. This is the best possible scenario since hardware limitations are the only throughput bottleneck that is actually imposed by the laws of physics.

Thus, having solved the trilemma puts us in a unique position: we get to choose the hardware limit for a node, and increase the throughput within this limit. This stresses the importance of an efficient implementation, as it would allow greater throughputs within the same hardware limitation.

With that in mind, Michael Sutton set out to rewrite the entire codebase. The original Golang client was no small feat, and its development process brought with it a mountain of wisdom that could be applied from the get-go in a complete overhaul. The Rust language was chosen as it is suitable for performance-oriented development, and it especially shines in contexts that require a lot of parallelization and disk access.

There are many engineering challenges that arise when trying to implement GHOSTDAG efficiently. Some are unique to GHOSTDAG, while others are general to high-throughput systems. Some were solved already in the original Golang client, and some were introduced or improved in the Rusty-Kaspa implementation.

For example, consider reachability queries. We want to devise an algorithm that, given two blocks, determines if one is in the past of the other. This action is performed many times when computing the GHOSTDAG protocol, so it is very important that it is implemented efficiently. This turns out to be very hard. The naive approach of just starting some graph traversal from the first block until hitting the second block or below takes too much time, and the naive approach of storing, for each block, its entire past, takes too much space. Finding a compromise that is both computationally and spatially reasonable (or even proving it is possible) is a long-standing (if not too prominent) open problem in computer science. Implementing Kaspa required finding a partial solution to the problem. It does not solve the problem in the general case, but it does work perfectly for the narrow DAGs created by GHOSTDAG.
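For illustration, the naive traversal approach mentioned above might look like the following Python sketch. This is not how Kaspa implements reachability; it is the slow baseline the text describes, written under the assumption that the DAG is a dictionary from block to parent list. The names `is_in_past` and `toy_dag` are hypothetical.

```python
from collections import deque

def is_in_past(parents, ancestor, descendant):
    """Naive reachability query: walk parent links from `descendant` toward genesis."""
    seen = set()
    queue = deque(parents[descendant])
    while queue:
        block = queue.popleft()
        if block == ancestor:
            return True
        if block not in seen:
            seen.add(block)
            queue.extend(parents[block])
    return False

# Toy DAG (hypothetical): A, B and C are parallel children of genesis; D merges A and B.
toy_dag = {"genesis": [], "A": ["genesis"], "B": ["genesis"],
           "C": ["genesis"], "D": ["A", "B"]}

print(is_in_past(toy_dag, "A", "D"))  # A is a parent of D
print(is_in_past(toy_dag, "C", "D"))  # C and D are parallel
```

Each query may visit the entire past of the later block, so the cost grows with the size of the DAG. That is acceptable for a toy example but far too slow when the query is issued many times per block, which is why a more compact index is needed in practice.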

Low Storage Solutions

In a high-throughput system, it is crucial to find secure ways to avoid data accumulation. Otherwise, the storage requirements for running nodes increase rapidly, and with them the time it takes a new node to sync into the network.

For this reason it was important to us to find a way to keep storage requirements constant without sacrificing security or decentralization. The solution we found is a combination of four innovations:

- A novel data pruning algorithm for blockDAGs, which allows discarding all block data except a constant-size suffix (currently set to three days)

- An adaptation of the Mining in Logarithmic Space protocol (Kiayias, Leonardos, Zindros) to DAGs

- A novel quadratic mass formula mechanism that seamlessly limits state bloat

- Cryptographic receipts, a mechanism for generating an indefinitely verifiable proof that a transaction was accepted

With these combined solutions, the network can process its full capacity of 3000 TPS indefinitely with the required storage never exceeding 200 GB, with no security compromises or reliance on archives.

Relying on the availability of the entire ledger is bad. It means that the only way to verify the consistency of the chain is to obtain a copy of a rapidly growing dataset. This makes maintaining and syncing a node much harder and slower. Bitcoin proposes a partial solution: allow some of the nodes to be pruned, but require validating all the ledger at sync times. So while pruned nodes do not require a lot of space during normal operation, they still face a long and exerting syncing process that can take up to a week. Additionally, as more nodes opt to run in pruned mode, the availability of “sources-of-truth” required to sync a new node decreases, centralizing the network.

The reason the ledger data is even needed is to avoid history rewriting attacks. If we only keep the last day of ledger data, a very weak adversary can create something that looks like a one-day-long piece of the ledger, but actually took months or even years to compute. In other words, using just the last day of ledger data does not allow us to verify the weight of the entire chain in order to properly apply the heaviest chain rule. The most straightforward solution is to keep all block headers (even if block data is pruned). In high-throughput systems, this is still prohibitive, since even header data accumulates very quickly. At 10 BPS, this comes up to about 32GB per year.

In 2020, Kiayias-Leonardos-Zindros proposed the mining in logarithmic space (MLS) protocol: a protocol that allows discarding almost all of the old block data and instead storing a “proof of proof-of-work” that is as hard to forge as creating a full competing chain. The proof is much smaller than the entire history of headers, and will take more than 10,000 years to reach a size of 100MB. Kaspa uses a DAG adaptation of the MLS protocol.

Consequently, all Kaspa nodes can act as sources of truth for syncing a node, providing better decentralization. Since the full ledger is not required to verify the chain, syncing nodes is much lighter and takes as little as 20 minutes.

One consequence of using MLS is that Kaspa nodes do not provide an immutable ledger. In high-throughput systems, requiring each node of the network to validate the entire history for all eternity is impractical. Instead, we propose the notion of cryptographic receipt. A cryptographic receipt for a transaction can only be computed as long as the transaction is not yet pruned (or with the help of an archival node). However, a receipt that was created in time can be verified forever by all nodes in the network, as it does not require any of the pruned data.

While archival nodes are not required for the operation of the network, there are other reasons to maintain an archive. For example, block explorers maintain an archive to provide data on old transactions and blocks. Archival nodes could also provide a paid service of retroactively creating a cryptographic receipt for old transactions. There are several archival nodes of the Kaspa network maintaining all of the ledger data (barring a few weeks during the inception of the network).

Besides the ledger data, there is also the state data (e.g. the UTXO set). The state data represents all the information required to understand the current consensus, and is stored separately from the ledger data. If for example many different outputs with residual values are created, they will remain in the UTXO set, increasing storage requirements forever (as long as they are not spent by their owner). This is the modus operandi of dusting attacks such as the one Kaspa faced in September 2023.

The available solutions for (organic and malicious) state bloat at the time were unsatisfactory. Stateless-client (i.e., accumulator-based) solutions require users to remain online and rely on data that is incompatible with HD wallets, considerably degrading user experience, while demurrage-based solutions contradict the basic ethos of cryptocurrency: that only the owner of the key has any access to the money.

To fill this gap, the Kaspa devs came up with an innovative solution called the harmonic mass formula. This solution is unique in the sense that it does not require changing the way transactions are verified or spent, or their retention policy. (It is in fact so non-intrusive, that it was initially implemented on the P2P layer alone. It will only be enforced by consensus after the Crescendo hard-fork). It restructures the “weight” of a transaction (in terms of the block space it requires) in such a way that the amount of block space wasted by an attacker, no matter how they structure their transactions, will be quadratic in the amount of state space they waste. In times of high usage, the network fees will make the attack extremely expensive, whereas in times of low usage, the throughput of the network will naturally limit state bloat.

References:

- MLS paper

- Adaptation of MLS to DAGs

- Data pruning for DAGs

- Manual verification of Kaspa chain (step-by-step guide)

- Cryptographic Receipts (KIP6)

- Quadratic Mass Formula (KIP9)

Resources:

- NBX Warsaw Node Storage Workshop

- Block Data pruning in DAGs: GHOSTDAG 101 (second half)

- MLS: Coauthor Zindros interview on Ergo Cast

- Cryptographic Receipts (tweet)

- A Very Dusty Yom-Kippur (dust attack post-mortem)

- Mr. Sutton’s Fabulous Formula (Twitter post)

Decentralized ASIC Mining

ASIC mining tends to be perceived as “more centralized” than GPU mining, but that is not quite the case. So-called “ASIC resistance” leads to more centralization in the long run. If there is enough economic incentive to develop it, designated hardware always wins. When it does, it is the “ASIC resistant” algos that become centralized: the network is ASIC dominated, but the “resistance” makes the ASICs energetically inefficient and costly to produce, raising both capital and operational expenses for miners, making the mining market much less accessible.

Just as important, ASIC based mining is more secure, since designated hardware is much harder to direct towards other purposes, and using a highly ASIC friendly algo makes it profitable to keep online even in times of lower usage.

The concern with ASIC mining is that corporate players will capture most of the coin. Kaspa has several features to address this:

- The hashing algo was chosen in a community poll a day prior to launch, the algo was modified to render existing FPGA/GPU software unusable

- A rapid emission schedule that assured that by the time ASICs hit retail, around 70% of the coin has been mined (similarly to Bitcoin)

- The high BPS of Kaspa scales down the entry barriers for small miners, making home Kaspa mining competitive and popular even when the total Kaspa mining market boats operational expenses nearing billion dollars

It is a deeply rooted misconception that GPU mining is more decentralized than ASIC mining. It is true that decentralizing ASIC mining is trickier, but it is far from impossible. The key ingredients for maintaining mining decentralization are high block rates and an ASIC friendly algo. The main concern about employing an ASIC friendly algo is the entry barrier for early adopters, which can harm the spread of the coin. This can be (and has been, in Kaspa) mitigated by using a modified algorithm for which no hardware exists yet, and employing a rapid emission schedule assuring that by the time ASICs hit retail, most of the coin would have been mined by GPU miners (who are more pressured to sell due to much higher operational costs).

Kaspa was CPU mined for several weeks before the community developed GPU miners, and by the time IceRiver ASICs first hit the market, almost 70% of the coin had been mined.

The discussion about mining policy is nuanced, so let us list a few important takeaways:

The first takeaway is that high block rates decrease entry barriers. The Bitcoin network creates 144 blocks a day. This means that there could be at most 144 solo miners or pools that see daily revenue. At 10 BPS, Kaspa will provide 864,000 blocks per day, greatly increasing this number. High block rates imply that much smaller mining operations can still see a steady income. This is evident in a trend that is unique to Kaspa: while in other coins, new generations of miners make the previous generation obsolete, the smallest Kaspa miners are still extremely popular. Newer versions of the home-mining-oriented KS0 are constantly produced and sold out. At the time of writing, the cost of having enough mining power to solo-mine with a daily income (assuming 10 BPS) is around one thousand dollars. In brief: mining is an economy of scale, but the scale required is inversely proportional to the block rate.
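The block counts quoted above follow directly from the block rates. A small sketch of the arithmetic, assuming Bitcoin's target of one block every ten minutes; the function name is ours:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def blocks_per_day(blocks, per_seconds):
    """Blocks produced per day at a rate of `blocks` every `per_seconds` seconds."""
    return blocks * SECONDS_PER_DAY // per_seconds


bitcoin = blocks_per_day(1, 600)  # one block every ten minutes
kaspa = blocks_per_day(10, 1)     # ten blocks per second

print(bitcoin, kaspa, kaspa // bitcoin)
```

The last figure is the ratio between the two: at 10 BPS there are 6,000 times as many block rewards per day to be shared among miners.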

The second takeaway is that designated hardware always wins. As seen with e.g. Litecoin’s Scrypt, if your coin is valuable enough, someone will eventually figure out a way to build ASICs that blow generic hardware like GPUs and FPGAs out of the competition. This is an inevitability (and in fact the reason why several algos are claimed to be “ASIC resistant” but none claim to be “ASIC proof”). ASIC resistance is a lose-lose: on one hand, the network will eventually become ASIC dominant, but on the other, the ASIC “resistance” will make ASICs require more energy, produce more heat and noise, have shorter life-spans, etc., making them much less accessible than miners for ASIC friendly algorithms. A funny tidbit is that the ASIC friendliness of Kaspa’s mining algorithm kHH is actually one of the things that drew GPU miners in: they noticed that mining Kaspa requires much less energy and cooling and deteriorates GPUs much more slowly, increasing its profitability.

The third takeaway is that ASICs provide reliable security. We expect all players to strive to maximize their profit. For GPU miners this usually means hopping from one coin to the next based on profitability. There is a plethora of GPU minable coins, all competing for the same pool of GPU miners, which couples hash rate to market trends and harms hash rate stability, and with it the stability of the network. ASICs, especially very energy efficient ones, provide much more stability. It is true that several coins could mine the same algo (in particular, the few Kaspa forks that use kHH), but the hash rate is still far more stable, especially for the largest coin using that algo (at the time of writing, Kaspa accounts for more than 99.7% of all kHH mining). The low operational costs afforded by kHH’s ASIC friendliness make sure it is almost never profitable to switch ASICs off, providing further stability.

The fourth takeaway is that energy-intensive mining harms decentralization. In GPU mining, energy expenses are a much more dominant fraction of the mining costs, making GPU mining much more profitable to people with access to cheap energy. This gives an unfair advantage to people who live in certain areas or run operations large enough to justify a private energy deal. For many people, the energy cost of GPU mining is a non-starter. In ASIC mining the hardware cost itself makes for a much larger fraction of the mining costs, dramatically reducing the effect of energy costs on the competitiveness of mining (and this effect is further increased for ASIC-friendly algos).

The fifth takeaway is that GPU mining requires spending. Since GPU mining has a lot of operational costs, miners are typically forced to sell a lot of the coin they mine to cover costs, giving the coin a better spread. For this reason, it is very healthy for a coin to have most of its circulation mined by GPU miners. To enjoy the benefits of both GPU and ASIC mining, Kaspa was launched with a modified version of the HH algorithm called kHH, and implemented a rapidly decreasing emission schedule. The former provided a long period of GPU dominance, while the latter guaranteed that by the time ASIC dominance was achieved most of the coin would already be in circulation.

ASIC mining can become very centralized, but by carefully identifying the reasons this happens, recognizing the huge role of high BPS as a countermeasure, and coming up with sensible ways to avoid them, Kaspa has managed to create a mining market that is ASIC dominant while remaining extremely decentralized, arguably more than most GPU mined coins.

Better Fee Market

The parallelism of blocks has surprising positive effects on the fee market. Roughly speaking, it incentivizes miners to (non-uniformly!) randomize their selection of transactions to include. This probabilistic approach “smooths out” the very sharp edges of fee markets we see on linear chains such as Bitcoin. This creates a fee market where users are incentivized to increase the fee by a meaningful amount even when the network is very far from being fully congested, providing a healthier fee market.

Fee markets on linear chains suffer from three well-known problems:

- Race to the bottom: as long as the network is not fully congested, there is no reason to pay any meaningful fee, as any transaction is guaranteed to be included.

- Bidding war: if the network is congested, it suffices to outbid the cheapest transaction to be included by a single satoshi to take its place. Users are not incentivized to increase their fees by a meaningful amount.

- Starvation: if a transaction pays a low fee compared to those currently included in blocks, it will never be included as long as the fees remain high.

For simplicity, imagine a situation where each block can only contain one transaction, and that there are two equally large miners. Say that there are two transactions in the mempool, one paying a fee of 100 kas, and the other paying a fee of 90 kas. Which transaction should the miners include?

In a linear chain such as Bitcoin, this is a no-brainer: include the 100 kas transaction. If you are the first one to produce a block, you get 100 kas, otherwise, you get nothing. In DAGs this is no longer the case. Both miners can produce a parallel block, and in this case they have an equal probability to claim the transaction. That is, if both miners include the 100 kas transaction, then the expected profit for each one is 50 kas (as there is 50% chance of 100 kas and 50% chance of 0 kas). What happens if, instead, both miners flip a coin to choose what transaction to include? From the point of view of each miner, these are the possible outcomes:

- I chose the 100 kas transaction, and the other miner chose the 90 kas transaction. This has a probability ¼, and my profit is 100.

- I chose the 90 kas transaction, and the other miner chose the 100 kas transaction. This has probability ¼ and my profit is 90.

- We both chose the 100 kas transaction. This has probability ¼ and in this case there is a 50% chance I see a profit of 100 kas, so my expected profit is 50 kas.

- We both chose the 90 kas transaction. This has probability ¼ and in this case there is a 50% chance I see a profit of 90 kas, so my expected profit is 45 kas.

Summing it all up, we see that the uniformly random selection strategy has an expected profit of 71.25 kas, which is more than 40% more profitable than always taking the highest paying transaction!
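The figures in this example can be checked with a few lines of code. This is only a sketch of the toy game described above (two equal miners, one transaction per block, a 50/50 split when both include the same transaction); the names `expected_profit`, `greedy`, and `uniform` are ours.

```python
from fractions import Fraction
from itertools import product

FEES = {"high": 100, "low": 90}  # the two mempool transactions, fees in kas


def expected_profit(mine, theirs, fees=FEES):
    """Expected fee income of one miner when both miners create parallel blocks.

    `mine` and `theirs` map a transaction name to the probability of
    including it. If both miners include the same transaction, each one
    collects its fee with probability 1/2.
    """
    total = Fraction(0)
    for (my_tx, p), (their_tx, q) in product(mine.items(), theirs.items()):
        share = Fraction(1, 2) if my_tx == their_tx else Fraction(1)
        total += p * q * share * fees[my_tx]
    return total


greedy = {"high": Fraction(1)}
uniform = {"high": Fraction(1, 2), "low": Fraction(1, 2)}

both_greedy = expected_profit(greedy, greedy)     # 50
both_uniform = expected_profit(uniform, uniform)  # 285/4 = 71.25

print(both_greedy, float(both_uniform), float(both_uniform / both_greedy))
```

Under the same assumptions, `expected_profit(greedy, uniform)` evaluates to 75: a miner facing a coin-flipping opponent does even better by being greedy. So mutual coin flipping beats mutual greed, but it is not itself a best response.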

A more careful analysis shows that uniformly random selection is still not optimal. The actual optimal strategy is too complicated to describe here, but the gist is that it samples a transaction from a probability distribution that favours higher paying transactions, but also gives lower paying transactions a chance.

This has several positive implications for the fee market:

- (Almost) no race to the bottom: a race to the bottom only appears when the transaction count is so low that all blocks can include all current transactions. Say the network creates on average 10 blocks in parallel, and the network is at 11% of its full capacity. Then typically, when creating a block, there are more transactions to choose from than a single block could fit, incentivizing miners to use random selection. Since higher fee transactions have better chances of being included, users are incentivized at this point to increase fees despite the network being very far from congested.

- No bidding war: probabilistic inclusion does not admit the sharp threshold we see in linear chains. Since the probability of being included is a smooth function of the fees you pay, increasing the fee by a negligible amount only provides a negligible improvement. Users are incentivized to increase their fees in a way that reflects the quality of service they are expecting to receive.

- No starvation: say the network throughput is 3000 TPS, and there are 4000 transactions in the mempool. The least-paying transaction still has some chance of being included. Even if this chance is one in ten thousand per block, it would take about ten thousand blocks on average before it is included. At 10 BPS, this translates to roughly 17 minutes. That is, users have the option to pay a low fee if they do not mind waiting longer for inclusion.
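The waiting time in the starvation example can be sketched as follows, under the simplifying assumption (ours) that every block independently includes the transaction with the same probability. The function names are illustrative.

```python
def expected_wait_seconds(one_in, blocks_per_second):
    """Mean waiting time if each block includes the transaction with probability 1/one_in."""
    return one_in / blocks_per_second


def prob_included_within(blocks, one_in):
    """Probability that at least one of `blocks` consecutive blocks includes the transaction."""
    return 1 - (1 - 1 / one_in) ** blocks


wait = expected_wait_seconds(10_000, 10)  # one-in-ten-thousand chance, 10 BPS
print(wait, wait / 60)                    # seconds, minutes
print(prob_included_within(10_000, 10_000))
```

The mean wait comes out at 1,000 seconds, a little under 17 minutes. Note that "ten thousand blocks" is an average: under this model the chance of having been included by then is only about 63%, so some low-fee transactions will wait noticeably longer.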

References: parallel inclusion games were first fully analyzed by Lewenberg, Sompolinsky and Zohar in the Inclusive paper

Resources: transaction selection games in DAGs

Fast Based ZK-Rollups

Kaspa is the only chain performant and responsive enough to handle a large volume of based ZK-rollups, allowing for a large and diverse L2 ecosystem, while requiring L2s to sequence on chain, making them as secure as the L1, inheriting Kaspa’s security, censorship resistance, and MEV resistance. Furthermore, coupling L1 and L2 assures that L2s do not become parasitic.

ZK-rollups are a very powerful tool for creating arbitrarily complex services, or L2s, over existing blockchains. The rough idea is that the L2 performs arbitrary computations that are committed to the L1 via zero-knowledge proofs. ZK-rollups come in two flavors: based rollups, which use the underlying chain to reach consensus, and unbased rollups, which reach consensus via an external mechanism and only use the L1 to commit to the result.

Based rollups have unquestionable benefits: since they use the underlying L1 to sequence, they inherit security properties such as censorship resistance. Additionally, using the L1 for sequencing means that L2 operations must be transmitted as L1 transactions (containing ZK proofs), tying the usage of the L2 to the L1, and preventing L2s from becoming parasitic. Furthermore, since the complicated sequencing process is delegated to the L1, developing a based rollup is considerably easier.

The main obstacle for based rollups, especially on proof-of-work, is that they require much more L1 throughput. While an unbased rollup can sequence its own computation, the state of a based rollup cannot progress without posting to the L1. Yes, arbitrarily complex computations can be condensed into a single ZK proof, but they won’t actually be sequenced and processed before the proof is posted to the L1.

On chains with limited throughput and large mempool delays, this makes the utility cost high and the chain progression erratic, making based rollups less practical. Kaspa’s unprecedented throughput can support a plethora of quick, cheap, and highly secure L2s.

Coming: Responsive Consensus

The upcoming DAGKnight protocol will be the first consensus protocol to respond to network conditions. This means that, for the first time, transactions could be confirmed as fast as the actual network, and not as fast as the network is at its slowest. Once implemented, Kaspa will become the fastest-confirming consensus protocol in existence.

Imagine you had to drive a car across the country, but you had to choose your travel speed in advance, and your car would only go at that exact speed until you reached your destination. Despite most of the roads being freeways and turnpikes, you will only make it there alive if you travel the entire way slowly enough to handle the one narrow, sharp bend along a cliff edge that happens to be on the way.

Fixing the speed in advance might sound absurd, but it is actually how all consensus protocols work. The protocol designer must assume in advance what the network delay is. However, if they underestimate the delay and the network conditions deteriorate, driving the delay meaningfully above the estimation, the security of the network plummets very similarly to a car falling off a cliff.

This forces protocol designers to parametrize their protocol with a large margin of error, to remain secure against network deterioration, which essentially means that the confirmation times are slower than they could be because they are computed with respect to that delay. This is also evident in Kaspa: the security of the network remains intact as long as the proportion of red blocks does not go over 5%, yet almost always that proportion is much closer to zero. However, we cannot reduce the confirmation times by increasing the proportion of red blocks because a deterioration in network conditions will degrade the security.

Enter DAGKnight, the first parameterless protocol that does not require the designer to estimate the network latency at all. DAGKnight allows each node operator to choose their own latency, and always targets the lowest latency possible that is currently still secure, making the world views of all nodes consistent. The actual protocol is much more complicated than that and borrows ideas from both the GHOSTDAG and SPECTRE protocols, but that is the gist.

How is this magic even possible? The key observation is that in proof-of-work there is no such thing as deterministic finality. You are never completely guaranteed that a transaction will never revert. You just know that as it accumulates confirmations, the probability that it reverts goes to zero very fast. So how close to zero is close enough? That is up to the recipient: it is subjective. However, the traditional models used in the literature to analyze distributed systems assume that finality is deterministic. That means these models cannot even express protocols like DAGKnight.

This inspired a new model, where each participant is allowed to choose her desired level of confidence. This slight change of model suffices to prove the security of DAGKnight. Moreover, this leads to a conjecture that subjective finality is actually required for parameterlessness. If true, this implies that with DAGKnight, Kaspa will provide faster confirmation times than the fastest theoretically possible proof-of-stake protocol (or any protocol based on another deterministic form of Sybil resistance).

Resources:

- DAGKnight presentation in CESC22

- DAGKnight overview (Twitter)

- The oblivious consensus paradigm (Twitter)

Coming: Game Theoretic MEV Resistance and Oracles

Kaspa’s multi-leader consensus allows for a new and promising approach for handling MEV and implementing Oracles (ways to allow smart contracts to depend on external information). The idea is that, since there are many parallel blocks accepted in each round that compete over including the same transactions, the protocol could implement a bidding process, designed such that its equilibria benefit the users and not just the miners.

Most blockchains implement single leader consensus. Every round, many entities compete over the right to decide the next block (by mining, staking, and so on), but eventually, a single entity obtains complete control over the decision. This allows miners to use the dependence and meaning of transactions to include them in a way that extracts value. This is called miner extractable value, or MEV in short.

Coming: Smart Contracts

Coming: Decentralized Development

Join & Follow the Community

We are a growing community and are always looking for enthusiastic people to join the project. If you are a coder, marketer, vlogger, community manager, enthusiast, or anything else, join the Kaspa Discord and say “hi!”

PUBLIC DONATIONS ARE WELCOME!

Simple donation through Cryptocurrency Checkout

Manual donations dev-fund-address: kaspa:precqv0krj3r6uyyfa36ga7s0u9jct0v4wg8ctsfde2gkrsgwgw8jgxfzfc98

Mining dev-fund-address: kaspa:pzhh76qc82wzduvsrd9xh4zde9qhp0xc8rl7qu2mvl2e42uvdqt75zrcgpm00

The wallet is managed by 4 members of the community (msutton, Tim, The SheepCat and demisrael) who were publicly voted in to become the Treasurers.